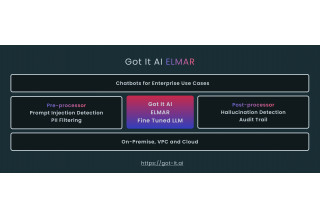

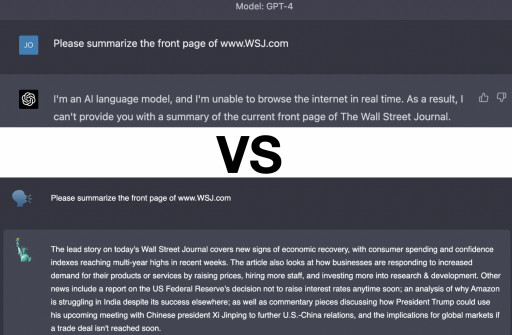

PALO ALTO, Calif., March 30, 2023 (Newswire.com) - Got It AI today announced ELMAR (Enterprise Language Model ARchitecture), an enterprise-ready LLM. ELMAR is an order of magnitude smaller than GPT-3, can run on premises, and integrates with any knowledge base for dialog-based chatbot Q&A applications. Key innovations in its architecture allow commercial use, because ELMAR is not based on Facebook Research's LLaMA or Stanford Alpaca. In addition, truth checking of responses, combined with post-processing that keeps incorrect responses from reaching the user, lowers the final rate of incorrect responses the user sees to a very low level. Unlike very large language models, which require expensive hardware, ELMAR can run on low-cost hardware. It will be available for pilots with enterprise beta testers who sign up at https://www.got-it.ai/elmar.

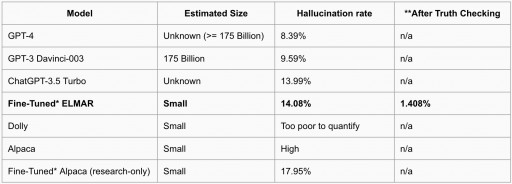

In addition, Got It AI is publishing hallucination-rate results for GPT-4, GPT-3, ChatGPT, GPT-J/Dolly, Stanford Alpaca and its own ELMAR, measured on a 100-article dataset with a Q&A test set. Got It AI's TruthChecker AI, announced in January and capable of catching 90% of hallucinations, was used to compare the hallucination rates, and the results were validated by humans. A playground for testing TruthChecker on this dataset and test set will be made available at https://www.got-it.ai/truthchecker.

Hallucination Rate Test Results

| Model | Size | Hallucination Rate | After Truth Checking\*\* |
| --- | --- | --- | --- |
| GPT-4 | > 175 B | 8.39% | n/a |
| GPT-3 Davinci-003 | 175 B | 9.59% | n/a |
| ChatGPT-3.5 Turbo | unknown | 13.99% | n/a |
| Fine-tuned\* ELMAR | small | 14.08% | 1.408% |
| Dolly | small | performed too poorly to measure | n/a |
| Alpaca | small | high | n/a |
| Fine-tuned\* Alpaca (research only) | small | 17.95% | n/a |

\* ELMAR and Alpaca were fine-tuned on the 100-article dataset because these models are significantly smaller than OpenAI's GPT-3 and GPT-4. Fine-tuning of OpenAI models was not considered because they have already been trained on large conversational datasets, and fine-tuned large OpenAI models are not as cost-effective to run as Alpaca and ELMAR. We aimed to compare models at equivalent price/performance to the greatest extent possible.

\*\* The After Truth Checking percentage assumes a 90% catch rate and that each caught response is withheld from the user.
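The footnoted figure follows from one multiplication: if 90% of hallucinated answers are caught and withheld, the remaining 10% still reach the user, so 14.08% × (1 − 0.90) = 1.408%. A minimal Python sketch of that calculation, assuming (as the footnote does) a flat catch rate that applies equally to every hallucinated answer; the function name is illustrative only:

```python
def residual_hallucination_rate(raw_rate: float, catch_rate: float) -> float:
    """Fraction of all answers that are hallucinations the checker misses.

    Assumes every caught hallucination is withheld from the user and that the
    catch rate applies uniformly to all hallucinated answers.
    """
    if not (0.0 <= raw_rate <= 1.0 and 0.0 <= catch_rate <= 1.0):
        raise ValueError("rates must be fractions between 0 and 1")
    # Only the uncaught share (1 - catch_rate) of hallucinations reaches the user.
    return raw_rate * (1.0 - catch_rate)


# Fine-tuned ELMAR row: 14.08% raw hallucination rate, 90% assumed catch rate.
print(f"{residual_hallucination_rate(0.1408, 0.90):.3%}")  # prints 1.408%
```

The result is a share of all test questions. The sketch does not model correct answers that a checker might wrongly block, a figure the release does not report.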

"Recently, it was suggested that smaller and older models like GPT-J can deliver ChatGPT-like experiences. In our experiments, we did not find this to be the case. Despite fine-tuning, such models performed significantly worse than other more advanced models. It is not just about the data, but also about modern model architectures and training techniques," said Chandra Khatri, Head of Conversational AI Research at Got It AI. "In our testing, smaller open source LLMs perform poorly on specific tasks unless they are fine-tuned on target datasets. For example, when we used Alpaca, an open source model, for a Q&A task on our target 100-article set, it resulted in a significant fraction of answers being incorrect or hallucinations, but did better after fine-tuning. ELMAR, when fine-tuned on the same dataset, produced accurate results, equivalent to ChatGPT-3."

"Enterprises are shutting off access to ChatGPT because they want guardrails. The key guardrails we're offering are in two critical areas: a truth checking model on the responses generated, and a pre-processing model for filtering out sensitive information sent to dialog-based chatbots," said Peter Relan, Chairman of Got It AI. "While LLaMA and Alpaca are research constrained licenses, our work enables commercial use. The ability to run ELMAR on premise creates a sense of control and safety around the use of generative AI models in the enterprise. It opens up a number of chatbot use cases involving potentially sensitive data: Internal Knowledge Base Queries, Customer Service Agent Assist, IT and HR Helpdesks, Customer Support, Sales Training, and many more. The availability of a solution for generative AI hallucinations is another key factor enabling enterprise adoption."

Key Features and Benefits of on-prem ELMAR

- Pre-processor for removing PII and preventing attacks on the language model

- ELMAR can be fine-tuned on a GPU and run cost-effectively on a smaller GPU

- TruthChecker can be fine-tuned on a GPU and run cost-effectively on a smaller GPU

- Integrations to Knowledge Bases enable dialog-based chatbot solutions
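The guardrails described above form a simple flow: a pre-processor strips PII from the user's question, the model answers from knowledge-base context, and a truth check decides whether that answer is shown or withheld. The Python sketch below illustrates only that flow. Everything in it is a hypothetical stand-in: the regex redaction, the naive word-overlap "checker", the fallback message, and the names `GuardedChatbot`, `redact_pii` and `toy_checker` are assumptions for illustration, not Got It AI's ELMAR or TruthChecker, whose interfaces are not described in this release.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical PII patterns; a production pre-processor would be far more thorough.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}

# Hypothetical message shown when an answer is withheld.
FALLBACK = "I'm not confident in that answer; let me connect you with an agent."


def redact_pii(text: str) -> str:
    """Pre-processing step: replace matched PII with placeholder tags."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def toy_checker(answer: str, context: str) -> bool:
    """Naive stand-in for a truth checker: every answer word must appear in the context."""
    context_words = set(re.findall(r"\w+", context.lower()))
    return all(word in context_words for word in re.findall(r"\w+", answer.lower()))


@dataclass
class GuardedChatbot:
    generate: Callable[[str, str], str]       # (question, context) -> draft answer
    is_supported: Callable[[str, str], bool]  # (answer, context) -> passes the check?

    def answer(self, question: str, context: str) -> str:
        # The model only ever sees the redacted question.
        draft = self.generate(redact_pii(question), context)
        # Post-processing: withhold drafts the checker flags as unsupported.
        return draft if self.is_supported(draft, context) else FALLBACK


if __name__ == "__main__":
    kb = "Refunds are processed within 5 business days."
    question = "I'm jane@example.com; when will my refund arrive?"
    grounded = GuardedChatbot(lambda q, c: "Refunds are processed within 5 business days", toy_checker)
    invented = GuardedChatbot(lambda q, c: "Refunds take 30 days", toy_checker)
    print(grounded.answer(question, kb))  # supported by the context, so it is shown
    print(invented.answer(question, kb))  # unsupported, so FALLBACK is shown instead
```

Passing the model and the checker in as plain callables keeps the guardrail logic independent of any particular LLM, which mirrors the release's point that the truth check sits outside the model itself.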

Media Enquiries: Contact Peter Brooks at [email protected]

Contact Information: Peter Brooks

[email protected]

David Chu

[email protected]

408-221-2176


Original Source: Got It AI Announces ELMAR, a Commercial-Use On-Prem LLM for Enterprises, With Guardrails Including Hallucination Checking

The post Got It AI Announces ELMAR, a Commercial-Use On-Prem LLM for Enterprises, With Guardrails Including Hallucination Checking first appeared on The Offspring Session.
